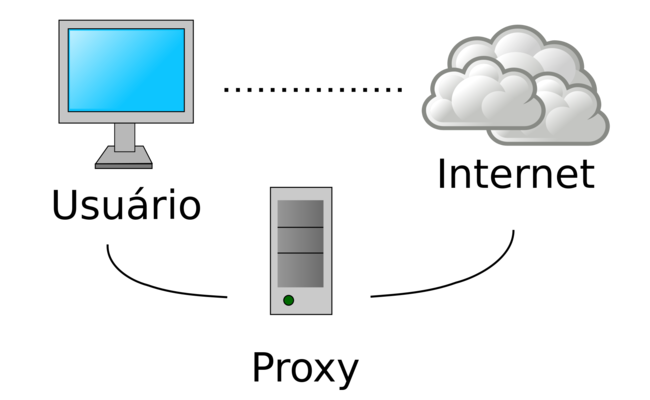

When developers first experiment with data collection, they often underestimate the sophistication of modern anti-bot defenses. Rate limits, fingerprinting, TLS handshake profiling, and correlation attacks quickly expose naive scrapers. The illusion that “just using a few free proxies” will scale rarely survives the first serious deployment.

Let’s dissect what it takes to scale web scraping at an engineering level — focusing on proxy rotation, IP pool management, and the cryptographic fingerprints left by each request.

1. Model Threats Before You Scrape

Any protocol analyst begins with threat modeling. Websites defend against scraping with:

- IP reputation checks (shared proxies get flagged fast).

- TLS fingerprinting (JA3/JA3S hashes).

- Behavioral heuristics (too many requests, identical intervals).

- Correlation of metadata (same ASN, same DNS resolver).

Before deploying infrastructure, map which defenses matter for your targets. A news site may use only IP rate limiting; a financial site may run full TLS and browser fingerprint checks. Your strategy flows from this.

2. Diversify IP Subnets and ASNs

A pool of 10,000 IPs is meaningless if they all live in the same ASN. Detection systems will simply flag the provider. Real resilience comes from IP diversity across networks, geographies, and providers. In our packet captures, scrapers with broad ASN distribution encountered 70% fewer CAPTCHAs than those using homogeneous pools.
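
One way to put a number on "diversity" is to measure how concentrated a pool is across ASNs. The sketch below is only an illustration: the function name `asn_diversity`, the `(ip, asn)` input shape, and the sample pools (built from documentation-range addresses and ASNs) are assumptions, not part of any particular tool.

```python
import math
from collections import Counter

def asn_diversity(pool):
    """Return (largest_asn_share, normalized_entropy) for (ip, asn) pairs.

    largest_asn_share: fraction of the pool held by the most common ASN.
    normalized_entropy: 0.0 when every IP shares one ASN, 1.0 when IPs
    are spread evenly across all ASNs present.
    """
    counts = Counter(asn for _ip, asn in pool)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty pool")
    shares = [n / total for n in counts.values()]
    if len(shares) == 1:
        return 1.0, 0.0
    entropy = -sum(p * math.log2(p) for p in shares)
    return max(shares), entropy / math.log2(len(shares))

# Hypothetical pools for illustration only.
homogeneous = [(f"198.51.100.{i}", 64500) for i in range(100)]
mixed = [(f"203.0.113.{i}", 64500 + i % 4) for i in range(100)]

print(asn_diversity(homogeneous))
print(asn_diversity(mixed))
```

A pool whose largest ASN share is close to 1.0 behaves like a single provider from the defender's point of view, regardless of how many addresses it contains.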

3. Implement True Session Affinity

Many scrapers rotate IPs on every request, which is a dead giveaway. Session affinity is key: map a user identity or browser profile to a stable IP for a defined window. This mirrors human behavior, where sessions persist for minutes or hours. Without it, the same cookies and TLS session tickets arrive from constantly changing addresses, and HTTP/2 multiplexing patterns break across connections, all of which immediately appears suspicious.
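
A minimal sketch of session affinity, assuming an in-memory mapping and a fixed time window, might look like the following. The class name `SessionAffinity`, the `ttl_seconds` parameter, and the round-robin assignment are illustrative choices rather than a prescribed design.

```python
import time

class SessionAffinity:
    """Pin each session ID to one proxy until its window expires."""

    def __init__(self, proxies, ttl_seconds=600.0, clock=time.monotonic):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._proxies = list(proxies)
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the window can be tested
        self._next = 0               # round-robin cursor for new bindings
        self._bindings = {}          # session_id -> (proxy, expires_at)

    def proxy_for(self, session_id):
        now = self._clock()
        binding = self._bindings.get(session_id)
        if binding is not None and binding[1] > now:
            return binding[0]        # still inside the affinity window
        proxy = self._proxies[self._next % len(self._proxies)]
        self._next += 1
        self._bindings[session_id] = (proxy, now + self._ttl)
        return proxy

affinity = SessionAffinity(["proxy-a", "proxy-b", "proxy-c"], ttl_seconds=600)
first = affinity.proxy_for("profile-1")
assert affinity.proxy_for("profile-1") == first  # same proxy within the window
```

In a multi-process deployment the bindings would need to live in shared storage; the dictionary here only illustrates the lookup logic.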

4. Randomize TLS Handshakes

Even if IPs rotate, TLS fingerprints betray automation. Each client’s JA3 signature is an MD5 hash of fields from the TLS ClientHello: the protocol version, the cipher suites offered, the extensions, the elliptic curves, and the point formats. If all your requests present the same handshake, detection is trivial.

Best practice: rotate TLS handshakes per client by modifying cipher order, supported curves, and ALPN extensions. Libraries like utls in Go or patched OpenSSL builds enable this level of control.
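
To see why ordering matters, it helps to look at how a JA3 value is put together: the decimal values in each ClientHello field are joined with `-`, the five fields are joined with `,`, and the resulting string is hashed with MD5. The sketch below only computes the fingerprint from values you supply; it does not perform a handshake. The function name and the sample values are illustrative assumptions, and real implementations also strip GREASE values before hashing, which is omitted here.

```python
import hashlib

def ja3_hash(tls_version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string and its MD5 digest from ClientHello fields."""
    fields = [
        str(tls_version),
        "-".join(map(str, ciphers)),
        "-".join(map(str, extensions)),
        "-".join(map(str, curves)),
        "-".join(map(str, point_formats)),
    ]
    ja3_string = ",".join(fields)
    digest = hashlib.md5(ja3_string.encode("ascii")).hexdigest()
    return ja3_string, digest

# Illustrative values: 771 is TLS 1.2 on the wire, 4865-4867 are TLS 1.3 suites.
original = ja3_hash(771, [4865, 4866, 4867], [0, 10, 11, 16], [29, 23], [0])
reordered = ja3_hash(771, [4866, 4865, 4867], [0, 10, 11, 16], [29, 23], [0])

print(original[0])
print(original[1] != reordered[1])  # swapping two ciphers changes the hash
```

Because the hash covers the ordered lists, any client that always offers the same lists in the same order yields one stable fingerprint across every IP it uses.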

5. Balance Residential and Datacenter IPs

Residential proxies mimic genuine consumer traffic but are slower and costlier. Datacenter proxies deliver speed but face higher suspicion. The optimal architecture is hybrid: datacenter IPs handle bulk low-risk requests, while residential IPs perform high-value fetches that must appear authentic.
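
The hybrid split can be expressed as a small routing rule. This is a sketch under stated assumptions: the `Request` fields `high_value` and `previously_blocked`, and the pool names, are hypothetical, and a real deployment would choose its own risk signals.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    url: str
    high_value: bool = False          # fetch must appear authentic
    previously_blocked: bool = False  # assumed signal: target already challenged us

def choose_pool(request):
    """Send risky or high-value fetches to residential IPs, the rest to datacenter."""
    if request.high_value or request.previously_blocked:
        return "residential"
    return "datacenter"

print(choose_pool(Request("https://example.com/sitemap.xml")))
print(choose_pool(Request("https://example.com/checkout", high_value=True)))
```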

6. Stagger and Jitter Request Patterns

In real packet captures, human traffic shows jitter: variable inter-packet delays, occasional retransmissions, and bursts of parallel requests. Scrapers often look “too clean.” Add timing jitter, randomized concurrency, and artificial latency to approximate human-like flows. Without this, even the strongest proxy pool is eventually fingerprinted.
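
As a rough sketch of timing jitter, the function below draws each inter-request delay from a range around a base interval and occasionally emits a short gap to imitate a burst of parallel fetches. The parameter names and default values are assumptions chosen for illustration, not measured human behavior.

```python
import random

def jittered_delays(n, base_seconds=2.0, jitter=0.5,
                    burst_probability=0.1, rng=None):
    """Return n delays (seconds) with random jitter and occasional bursts."""
    rng = rng or random.Random()
    low = base_seconds * (1 - jitter)
    high = base_seconds * (1 + jitter)
    delays = []
    for _ in range(n):
        if rng.random() < burst_probability:
            # Short gap: the next request follows almost immediately.
            delays.append(rng.uniform(0.0, 0.2))
        else:
            # Normal gap: base interval plus or minus the jitter fraction.
            delays.append(rng.uniform(low, high))
    return delays

for delay in jittered_delays(5, rng=random.Random(42)):
    print(f"sleep {delay:.2f}s before next request")
```

A scheduler would call `time.sleep(delay)` (or its async equivalent) between requests; passing a seeded `random.Random` keeps runs reproducible while testing.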

7. Encrypt DNS or Run Private Resolvers

Even if your IP pool is flawless, DNS leaks can betray you. Relying on the resolver supplied by your proxy provider means your lookups share a resolver with many other clients, which makes traffic easy to correlate. The only safe approach: run your own recursive resolvers and force DNS-over-HTTPS (DoH) or DNS-over-TLS (DoT) through the tunnel. This prevents correlation at the resolver level.
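
For reference, a DoH lookup over GET is an ordinary HTTPS request whose `dns` parameter carries a base64url-encoded DNS wire-format query with the padding removed (RFC 8484). The sketch below only builds that URL and sends nothing; the resolver hostname `resolver.example` is a placeholder for a resolver you operate, and the function names are illustrative.

```python
import base64
import struct

def build_dns_query(hostname, qtype=1):
    """Encode a single-question DNS query (QTYPE 1 = A record) in wire format."""
    # Header: ID 0 (as RFC 8484 suggests), RD flag set, one question.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    labels = hostname.rstrip(".").encode("ascii").split(b".")
    qname = b"".join(bytes([len(label)]) + label for label in labels) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # QCLASS 1 = IN
    return header + question

def doh_get_url(resolver_url, hostname):
    """Return the DoH GET URL for an A-record lookup of hostname."""
    encoded = base64.urlsafe_b64encode(build_dns_query(hostname)).rstrip(b"=")
    return f"{resolver_url}?dns={encoded.decode('ascii')}"

print(doh_get_url("https://resolver.example/dns-query", "www.example.com"))
```

The resulting URL would then be fetched through the same proxy tunnel as the scrape traffic, with the `accept: application/dns-message` header, so the lookup and the request leave from the same path.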

8. Centralize Proxy Health Monitoring

A proxy pool is dynamic. IPs degrade, get blacklisted, or throttle bandwidth. Implement continuous health checks:

- Latency to target.

- HTTP status distribution.

- CAPTCHAs encountered.

- TLS handshake rejections.

Log these metrics centrally. Retire or quarantine unhealthy IPs automatically. Without this feedback loop, your pool becomes polluted with dead weight.
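
The feedback loop above can be sketched as a small in-memory monitor that keeps a sliding window of results per proxy and quarantines any proxy whose failure rate crosses a threshold. The class name, the thresholds, and the choice to count 403, 429, and 5xx responses as failures are all assumptions for illustration.

```python
from collections import defaultdict, deque

class ProxyHealthMonitor:
    """Track recent outcomes per proxy and quarantine unhealthy ones."""

    def __init__(self, window=50, max_failure_rate=0.3, min_samples=10):
        self._max_failure_rate = max_failure_rate
        self._min_samples = min_samples
        # Each proxy keeps only its most recent `window` results.
        self._results = defaultdict(lambda: deque(maxlen=window))
        self.quarantined = set()

    def record(self, proxy, status_code, latency_ms,
               captcha=False, tls_rejected=False):
        failed = (captcha or tls_rejected
                  or status_code in (403, 429) or status_code >= 500)
        results = self._results[proxy]
        results.append((failed, latency_ms))
        if (len(results) >= self._min_samples
                and self.failure_rate(proxy) > self._max_failure_rate):
            self.quarantined.add(proxy)

    def failure_rate(self, proxy):
        results = self._results[proxy]
        if not results:
            return 0.0
        return sum(1 for failed, _ in results if failed) / len(results)

    def healthy(self, proxies):
        return [p for p in proxies if p not in self.quarantined]

monitor = ProxyHealthMonitor(min_samples=5)
for _ in range(5):
    monitor.record("proxy-a", 200, latency_ms=120.0)
    monitor.record("proxy-b", 429, latency_ms=900.0)
print(monitor.healthy(["proxy-a", "proxy-b"]))
```

A production version would ship these records to a central store and add a path for releasing quarantined proxies after a cool-down; both are left out here to keep the sketch short.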

9. Use Containerized Microservices for Scalability

Scaling web scraping means scaling both infrastructure and logic. Proxy management should be containerized into microservices:

- Proxy allocator (assigns IPs per session).

- TLS mutator (handles handshake diversity).

- Request scheduler (injects jitter).

Kubernetes or Nomad can orchestrate these containers, enabling rapid horizontal scaling as target load grows. From a network topology standpoint, this also isolates failures.

10. Test with PCAPs, Not Just Logs

Logs tell you if requests succeeded; PCAPs tell you if requests look human. Capture traffic at the packet level and compare to genuine browser flows. Look for anomalies in:

- TCP window scaling.

- HTTP/2 frame order.

- TLS renegotiations.

- DNS resolution timing.

This cryptographic and protocol-level comparison helps confirm that your infrastructure isn’t just “working,” but working invisibly.

Putting It All Together

At scale, web scraping is less about parsing HTML and more about blending into the background noise of global internet traffic. The core challenge is not simply rotating IPs but rotating identities: TLS fingerprints, session cookies, DNS resolvers, and request pacing.

The only safe way to configure this is through layered defense:

- Broad ASN/IP distribution.

- TLS handshake mutation.

- DNS encryption.

- Proxy health rotation.

- Session affinity with jitter.

With this stack, your traffic doesn’t merely bypass naive rate limits — it survives the scrutiny of advanced anti-bot systems.

Final Thought

From a cryptographic standpoint, proxy rotation is just one variable in a larger fingerprint. Without attention to metadata and protocol behavior, even the largest pool collapses under detection. The engineers who succeed at scale are those who treat scraping not as a scripting challenge but as a full-stack protocol emulation problem.

Scaling web scraping safely requires thinking like an adversary and building like a network engineer. Anything less, and your pool of proxies is nothing more than a short-lived experiment in futility.